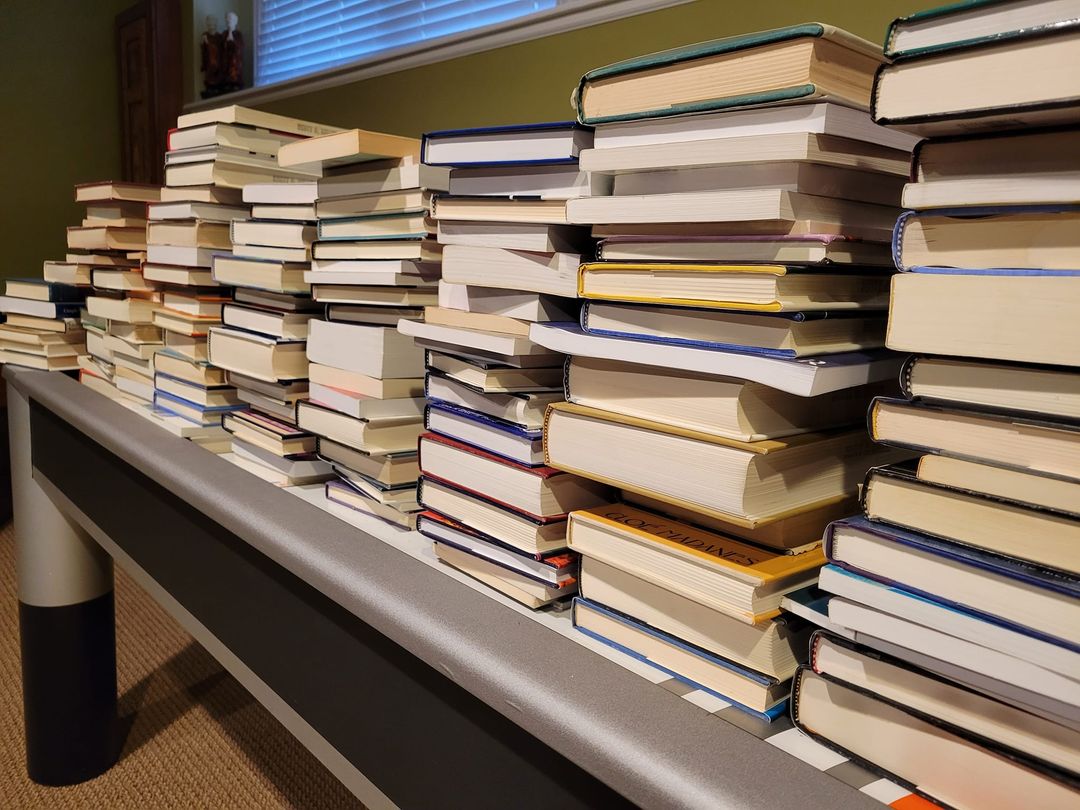

Late last year, I began a project I’d been putting off for a long while: culling my professional books. I had thousands. They filled the shelves in both my office and home. To be sure, I did not collect for the sake of collecting. Each had been important to me at some time, served some purpose, be it a research or professional development project — or so I thought.

I contacted several local bookstores. I live in Chicago — a big city with many interesting shops and loads of clinicians. I also posted on social media. “Surely,” I was convinced, “someone would be interested.” After all, many were classics and more than a few had been signed by the authors.

I wish I had taken a selfie when the manager of one store told me, “These are pretty much worthless.” And no, they would not take them in trade or as a donation. “We’d just put them in the dumpster out back anyway,” they said with a laugh, “no one is interested.”

Honestly, I was floored. I couldn’t even give the books away!

The experience gave me pause. However, over a period of several months, and after much reflection, I gradually (and grudgingly) began to agree with the manager’s assessment. The truth was very few — maybe 10 to 20 — had been transformative, becoming the reference works I returned to time and again for both understanding and direction in my professional career.

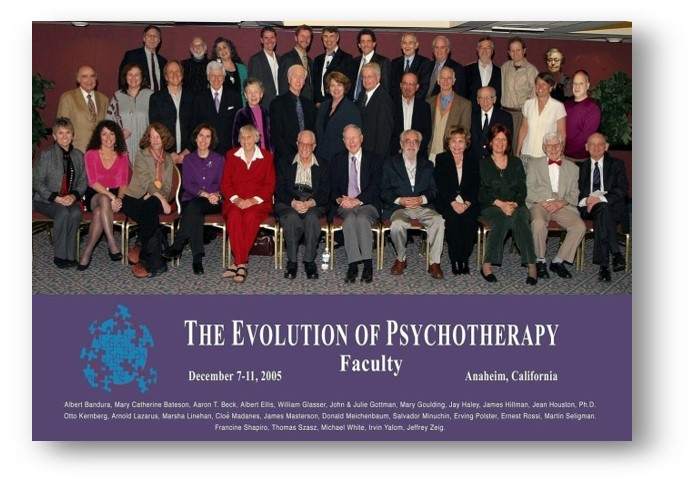

Among that small group, one volume clearly stands out: The Handbook of Psychotherapy and Behavior Change, a book I’ve considered my “secret source” of knowledge about psychotherapy. Beginning in the 1970s, every edition has contained the most comprehensive, non-ideological, scientifically literate review of “what works” in our field.

Why secret? Because so few practitioners have ever heard of it, much less read it. Together with my colleague Dr. Dan Lewis, I review the most current, 50th anniversary edition. We also cover Ghost Hunter, a book about William James’ investigation of psychics and mediums.

What do these two books have in common? In a word, “science.” Don’t take my word for it, however. Listen to the podcast or video yourself!

Until next time, all the best!

Scott

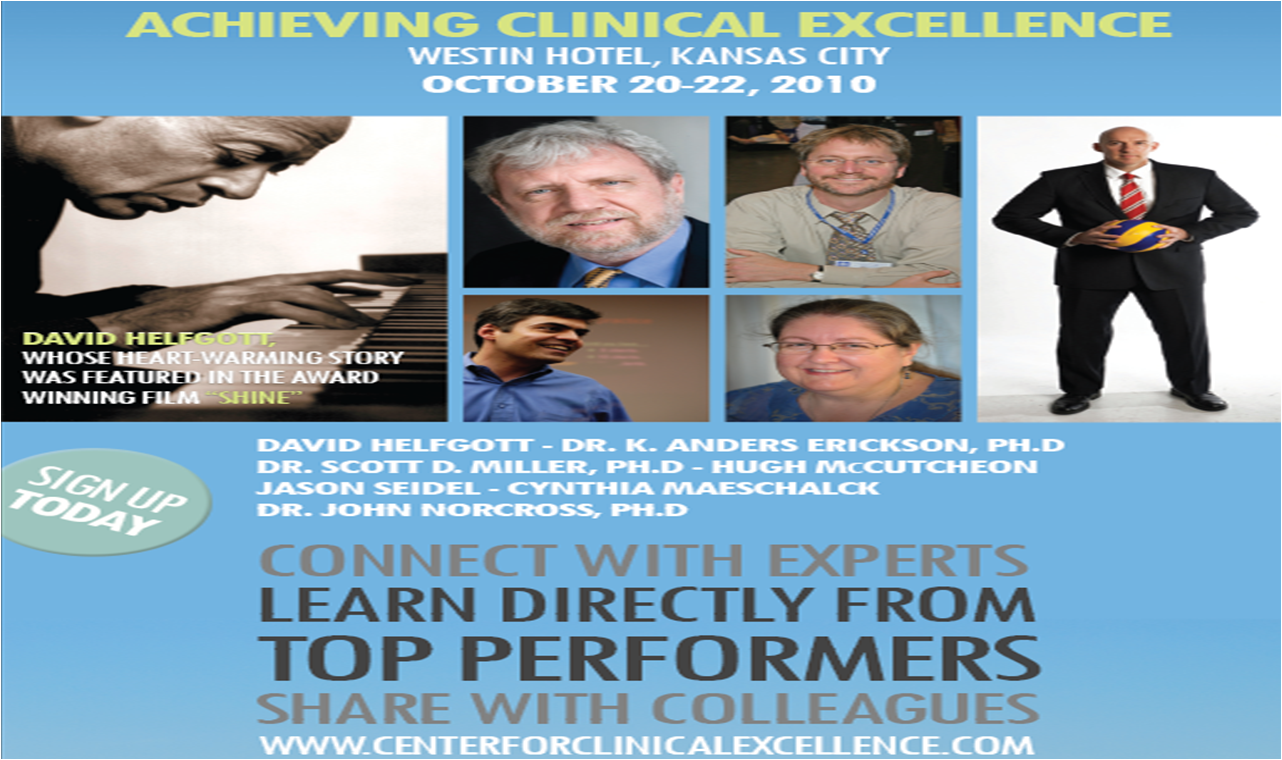

Scott D. Miller, Ph.D.

Director, International Center for Clinical Excellence


The boundary between “belief in the process” and “denial of reality” is remarkably fuzzy. Hope is a significant contributor to outcome, accounting for as much as 30% of the variance in results. At the same time, it becomes toxic when actual outcomes are distorted in a manner that causes practitioners to miss important opportunities to grow and develop, not to mention help more clients.

