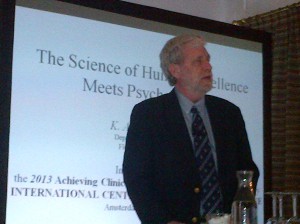

My, how time flies! Nearly three weeks have passed since hundreds of clinicians, researchers, and educators met in Amsterdam, Holland for the 2013 “Achieving Clinical Excellence” conference. Participants came from around the globe–Holland, the US, Germany, Denmark, Italy, Russia, Norway, Sweden, New Zealand, Romania, Australia, France–for three days of presentations on improving the quality and outcome of behavioral healthcare. Suffice it to say, we had a blast!

The conference organizers, Dr. Liz Pluut and Danish psychologist Susanne Bargmann, did a fantastic job planning the event: organizing a beautiful venue (the same building where the plans for New York City were drafted back in the 17th century), coordinating speakers (36 from around the globe), and arranging meals, hotel rooms, and handouts.

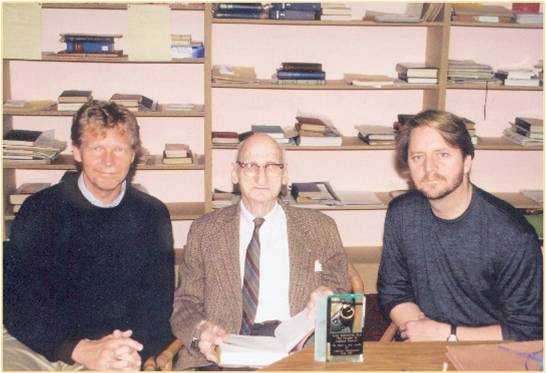

Dr. Pluut opened the conference and introduced the opening plenary speaker, Dr. K. Anders Ericsson, the world’s leading researcher and “expert on expertise.” Virtually all of the work being done by me and my colleagues at the ICCE on the study of excellence and expertise among therapists is based on the three decades of pioneering work done by Dr. Ericsson. You can read about our work, of course, in several recent articles: Supershrinks, The Road to Mastery, or the latest The Outcome of Psychotherapy: Past, Present and Future (which appeared in the 50th anniversary edition of the journal, Psychotherapy).

Over the next several weeks, I’ll be posting summaries and videos of many of the presentations, including Dr. Ericsson’s. One key aspect of his work is the idea of “Deliberate Practice.” Each of the afternoon sessions on the first day focused on this important topic, describing how clinicians, agency managers, and systems of care can apply it to improve their skills and outcomes.

The first of these presentations was by psychologist Birgit Valla–the leader of Family Help, a mental health agency in Stange, Norway–entitled, “Unreflectingly Bad or Deliberately Good: Deciding the Future of Mental Health Services.” Grab a cup of coffee and listen in…

Oh, yeah…while on the subject of excellence, here’s an interview that just appeared in the latest issue of the UK’s Therapy Today magazine:
