It’s one of my favorite lines from one of my all-time favorite films. Civilian Ellen Ripley (Sigourney Weaver) accompanies a troop of “colonial marines” to LV-426. Contact with the people living and working on the distant exomoon has been lost. A formidable life form is suspected. The Alien. Ripley is on board as an advisor. The only person who has ever met the creature. The lone survivor of a ship whose crew was decimated hours after first contact.

On arrival, Ripley briefs the team. Her description and warnings are met with a mixture of determination and derision by the tough, experienced, highly trained, and well-equipped soldiers. On touchdown, the group immediately jumps into action. First contact does not go well. Confidence quickly gives way to chaos and confusion. Not only do many die, but the actions they take to defend themselves inadvertently damage a nuclear reactor.

If Ripley and the small group that remains hope to survive, they must get off the planet as soon as possible. With senior leaders out of commission, command decisions fall to a lowly corporal named Dwayne Hicks. His team is tired and facing overwhelming odds. It’s then he utters the line. “Hey, listen,” he says, “We’re all in strung out shape, but stay frosty, and alert …”

Stay frosty and alert.

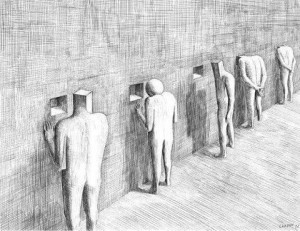

Sage counsel (advice which, had it been heeded from the very outset of the journey, would likely have changed the course of events), but also exceedingly difficult to follow. Sounds. Smells. Flavors. Touch. Motion. Attention. Most behaviors. Once we become accustomed to them, they disappear from consciousness.

Said another way, experience dulls the senses. Except when it doesn’t. Turns out, some people are less prone to habituation.

In his study of highly effective psychotherapists, for example, my colleague Dr. Daryl Chow (2014) found “the number of times therapists were surprised by clients’ feedback … was … a significant predictor of client outcome” (p. xiii). Turns out, highly effective therapists frequently see something important in what average practitioners conclude is simply “more of the same.” It should come as no surprise then that a large body of evidence finds no correlation between therapists’ effectiveness and their age, training, professional degree or certification, case load, or amount of clinical experience (1, 2).

Staying “frosty and alert” is the subject of Episode 5 of The Book Case Podcast. My colleague Dr. Dan Lewis and I review three new books, each organized around overcoming the natural human tendency to develop attentional biases and blind spots. Be sure to leave a comment after listening.

Until next time,

Scott

Scott D. Miller, Ph.D.

Director, International Center for Clinical Excellence

P.S.: As the Spring workshops in feedback-informed treatment (FIT) are sold out, registration is now open for the Summer 2022 events.

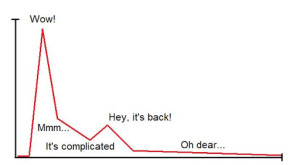

The boundary between “belief in the process” and “denial of reality” is remarkably fuzzy. Hope is a significant contributor to outcome, accounting for as much as 30% of the variance in results. At the same time, it becomes toxic when actual outcomes are distorted in a manner that causes practitioners to miss important opportunities to grow and develop, not to mention help more clients.
