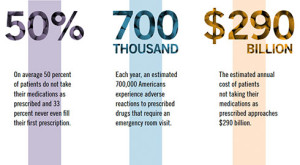

Medication adherence is a BIG problem. According to recent research, nearly one-third of the prescriptions written are never filled. Other data show that more than 60% of people who actually go to the pharmacy and pick up the drug do not take it as prescribed.

What’s the problem, you may ask? Inefficiency aside, the health risks are staggering. Consider, for example, that the prescriptions least likely to be filled are those aimed at treating headache (51 percent), heart disease (51.3 percent), and depression (36.8 percent).

When cost is factored into the equation, the impact of the problem on an already overburdened healthcare system becomes even more obvious. Research indicates that not taking prescribed medications costs an estimated $290 billion per year, or nearly $1,000 for every man, woman, and child living in the United States. It’s not hard to imagine more useful ways that money could be spent.

What can be done?

Enter Dr. Jan Pringle, director of the Program Evaluation Research Unit and Professor of Pharmacy and Therapeutics at the University of Pittsburgh. As I blogged back in 2009, Jan and I met at a workshop I gave on feedback-informed treatment (FIT) in Pittsburgh. Shortly thereafter, she began training pharmacists in a community pharmacy to use the Session Rating Scale (SRS), a four-item measure of the therapeutic alliance, in their encounters with customers.

It wasn’t long before Jan had results. Her first study found that administering and discussing the SRS at the time medications were dispensed resulted in significantly improved adherence (you can read the complete study below).

She didn’t stop there, however.

Just a few weeks ago, Jan forwarded the results of a much larger study, one involving 600 pharmacists and nearly 60,000 patients. Through a special arrangement with the publisher, the entire study is available via the link on her publications page on the University website.

Suffice it to say that using the measures, in combination with a brief interview between pharmacist and patient, significantly improved adherence across five medication classes aimed at treating chronic health conditions (i.e., calcium channel blockers, oral diabetes medications, beta-blockers, statins, and renin-angiotensin system antagonists). In addition to the obvious health benefits, the study also documented significant cost reductions. She estimates that using these brief, easy-to-use tools would result in annual savings of $1.4 million for any insurer or payer covering at least 10,000 lives!

Prior to Jan’s research, the evidence base for the Outcome Rating Scale (ORS) and the SRS was focused exclusively on behavioral health services. These two studies point to exciting possibilities for using feedback to improve the effectiveness and efficiency of healthcare in general.

The tools used in the pharmacy research have been reviewed and deemed evidence-based by the Substance Abuse and Mental Health Services Administration.

Together, the measures and feedback process are known as PCOMS, and detailed information about them can be found at www.whatispcoms.com. It’s easy to get started, and the measures are free for individual healthcare practitioners!

