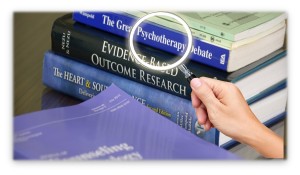

It’s a complaint I’ve heard since the earliest days of my career: therapists do not read the research. I often mentioned it when teaching workshops around the globe.

“How do we know?” I would jokingly ask, and then quickly answer, “Research, of course!”

I’ve long expressed concern that practitioners, like people living before the invention of the printing press who depended on priests and “The Church” to read and interpret the Bible, are dependent on researchers to tell them how to work.

- I advised reading the research, encouraging therapists who were skittish to skip the methodology and statistics and cut straight to the discussion section.

- I taught courses/workshops specifically aimed at helping therapists understand and digest research findings.

- I’ve published research on my own work despite not being employed by a university or receiving grant funding.

- I’ve been careful to read available studies and cite the appropriate research in my presentations and writing.

I was naïve.

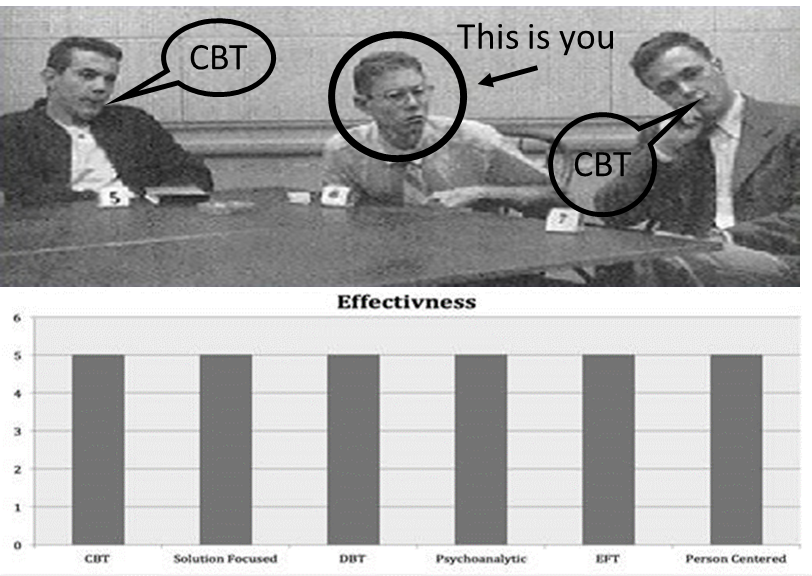

To begin, the “research-industrial complex” (to paraphrase American president Dwight D. Eisenhower) has tremendous power and influence despite often being unreflective of, and disconnected from, the realities of actual clinical practice. The dominance of CBT (and its many offshoots) in practice, policy, and reimbursement is a good example. In some parts of the world, governments and other payers restrict training in, and reimbursement for, any other modality. They do so despite there being no evidence that CBT has led to improved results and, as documented previously on my blog, despite data showing that such restrictions lead to poorer outcomes.

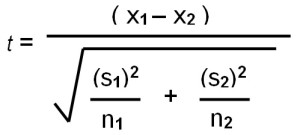

More to the point, since I first entered the field, research has become much harder to read and understand.

How do we know? Research!

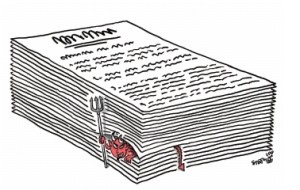

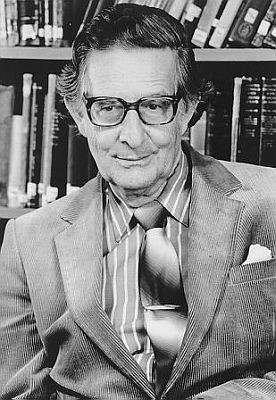

Sociologist David Hayes wrote about this trend in Nature more than 30 years ago, arguing it constituted “a threat to an essential characteristic of the endeavor – its openness to outside examination and appraisal” (p. 746).

I’ve been on the receiving end of what Hayes warned about long ago. Good scientists can disagree. Indeed, I welcome and have benefited from the critical feedback provided when my work is peer-reviewed. At the same time, to be helpful, the person reviewing the work must know the relevant literature and the methods employed. And yet, the ever-growing complexity of research severely limits the pool of “peers” able to understand and comment usefully, at times narrowing it, as I’ve also experienced, to those whose work directly competes with one’s own.

Still, as Hayes notes, the far greater threat is the lack of openness and transparency resulting from scientists’ inability to communicate their findings in a way that others can understand and independently appraise. Popular internet memes like, “I believe in science,” “stay in your lane,” and “if you disagree with a scientist, you are wrong,” are examples of the problem, not the solution. Beliefs are the province of religion, politics and policy. The challenge is to understand the strengths and limitations of the methodology and results of the process called science — especially given the growing inaccessibility of science, even to scientists.

Continuing with “business as usual,” approaching science as a matter of “faith” rather than as an evidence-based activity, is a vanity we can ill afford.

Until next time,

Scott

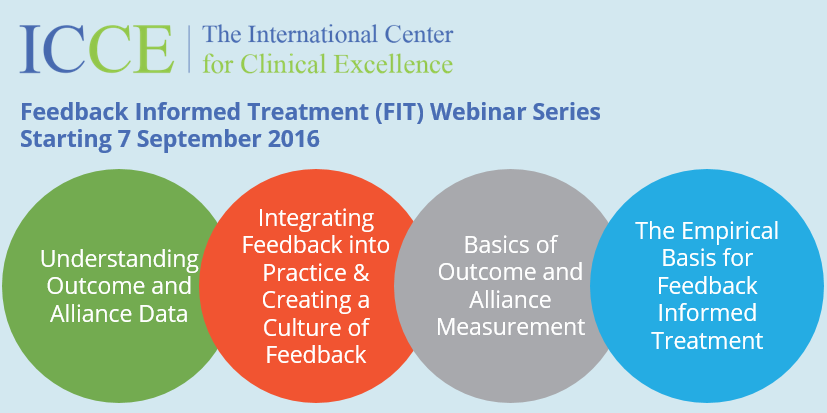

Director, International Center for Clinical Excellence

ose not to go to psychotherapy because they are busy doing something else–consulting psychics, mediums, and other spiritual advisers–forms of healing that are a better fit with their beliefs, that “sing to their souls.”

ose not to go to psychotherapy because they are busy doing something else–consulting psychics, mediums, and other spiritual advisers–forms of healing that are a better fit with their beliefs, that “sing to their souls.”

between psychologist Eugene Landy and his famous client, Beach Boy Brian Wilson. In a word, it was disturbing. The psychologist did “24-hour-a-day” therapy, as he termed it, living full time with the singer-songwriter, keeping Wilson isolated from family and friends, and on a steady dose of psychotropic drugs while simultaneously taking ownership of Wilson’s songs, and charging $430,000 in fees annually. Eventually, the State of California intervened, forcing the psychologist to surrender his license to practice. As egregious as the behavior of this practitioner was, the problem of deterioration in psychotherapy goes beyond the field’s “bad apples.”

between psychologist Eugene Landy and his famous client, Beach Boy Brian Wilson. In a word, it was disturbing. The psychologist did “24-hour-a-day” therapy, as he termed it, living full time with the singer-songwriter, keeping Wilson isolated from family and friends, and on a steady dose of psychotropic drugs while simultaneously taking ownership of Wilson’s songs, and charging $430,000 in fees annually. Eventually, the State of California intervened, forcing the psychologist to surrender his license to practice. As egregious as the behavior of this practitioner was, the problem of deterioration in psychotherapy goes beyond the field’s “bad apples.”

Norwegian psychologist Jørgen A. Flor just completed a study on the subject. We’ve been corresponding for a number of year as he worked on the project. Given the limited information available, I was interested.

Norwegian psychologist Jørgen A. Flor just completed a study on the subject. We’ve been corresponding for a number of year as he worked on the project. Given the limited information available, I was interested.

ment still popular today; namely, that by including studies of varying (read: poor) quality, Smith and Glass OVERESTIMATED the effectiveness of psychotherapy. Were such studies excluded, they contended, the results would most certainly be different and behavior therapy—Eysenck’s preferred method—would once again prove superior.

ment still popular today; namely, that by including studies of varying (read: poor) quality, Smith and Glass OVERESTIMATED the effectiveness of psychotherapy. Were such studies excluded, they contended, the results would most certainly be different and behavior therapy—Eysenck’s preferred method—would once again prove superior.

ave not.

ave not.

ing Psychology.

ing Psychology.

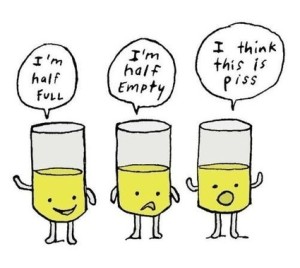

e boundary between “belief in the process” and “denial of reality” is remarkably fuzzy. Hope is a significant contributor to outcome—accounting for as much as 30% of the variance in results. At the same time, it becomes toxic when actual outcomes are distorted in a manner that causes practitioners to miss important opportunities to grow and develop—not to mention help more clients.

e boundary between “belief in the process” and “denial of reality” is remarkably fuzzy. Hope is a significant contributor to outcome—accounting for as much as 30% of the variance in results. At the same time, it becomes toxic when actual outcomes are distorted in a manner that causes practitioners to miss important opportunities to grow and develop—not to mention help more clients.