OK, this post may not be for everyone. I’m hoping to “go beyond the headlines,” “dig deep,” and cover a subject essential to research on the effectiveness of psychotherapy. So, if you fit point #2 in the definition above, read on.

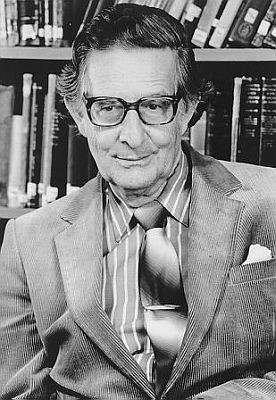

It’s easy to forget the revolution that took place in the field of psychotherapy a mere 40 years ago. At that time, the efficacy of psychotherapy was in serious question. As I posted last week, psychologist Hans Eysenck (1952, 1961, 1966) had published a review of studies purporting to show that psychotherapy was not only ineffective, but potentially harmful. Proponents of psychotherapy responded with their own reviews (cf. Bergin, 1971). Back and forth each side went, arguing their respective positions. That is, until Mary Lee Smith and Gene Glass (1977) published the first meta-analysis of psychotherapy outcome studies.

Their original analysis of 375 studies showed psychotherapy to be remarkably beneficial. As I’ve said here, and frequently on my blog, they found that the average treated client was better off than 80% of people with similar problems who were untreated.

Eysenck and other critics (1978, 1984; Rachman and Wilson 1980) immediately complained about the use of meta-analysis, using an argument still popular today; namely, that by including studies of varying (read: poor) quality, Smith and Glass OVERESTIMATED the effectiveness of psychotherapy. Were such studies excluded, they contended, the results would most certainly be different and behavior therapy, Eysenck’s preferred method, would once again prove superior.

For Smith and Glass, such claims were not a matter of polemics, but rather empirical questions serious scientists could test—with meta-analysis, of course.

So, what did they do? Smith and Glass rated the quality of all outcome studies with specific criteria and multiple raters. And what did they find? The better and more tightly controlled the studies were, the more effective psychotherapy proved to be. Studies of low, medium, and high internal validity, for example, had effect sizes of .78, .78, and .88, respectively. Other meta-analyses followed, using slightly different samples, with similar results: the tighter the study, the more effective psychotherapy proved to be.
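For readers who want to see how an effect size maps onto a statement like "better off than X% of untreated people," here is a minimal sketch. It assumes the effect size is a standardized mean difference and that outcomes in both groups are roughly normally distributed with equal variance. Those assumptions, and the function name `percent_better_off`, are mine for illustration; they are not taken from Smith and Glass.

```python
from statistics import NormalDist


def percent_better_off(effect_size: float) -> float:
    """Percent of the untreated group scoring below the average treated client.

    Assumes normal, equal-variance outcome distributions in both groups
    (an assumption of this sketch, not something reported in the studies).
    """
    # The average treated client sits `effect_size` standard deviations
    # above the untreated mean, so we read off the standard normal CDF.
    return 100 * NormalDist().cdf(effect_size)


# Effect sizes quoted above for low, medium, and high internal validity.
for label, d in [("low", 0.78), ("medium", 0.78), ("high", 0.88)]:
    print(f"{label:>6} validity: d = {d:.2f} -> {percent_better_off(d):.0f}%")
```

Under those assumptions, effect sizes in this range put the average treated client ahead of roughly 78 to 81 percent of untreated people, which is in the neighborhood of the 80% figure quoted earlier.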

Importantly, the figures reported by Smith and Glass have stood the test of time. Indeed, the most recent meta-analyses provide estimates of the effectiveness of psychotherapy that are nearly identical to those generated in Smith and Glass’s original study. Moreover, use of their pioneering method has exploded, becoming THE standard method for aggregating and understanding results from studies in education, psychology, and medicine.

As psychologist Sheldon Kopp (1973) was fond of saying, “All solutions breed new problems.” Over the last two decades the number of meta-analyses of psychotherapy research has exploded. In fact, there are now more meta-analyses than there were studies of psychotherapy at the time of Smith and Glass’s original research. The result is that it’s become exceedingly challenging to understand and integrate information generated by such studies into a larger gestalt about the effectiveness of psychotherapy.

Last week, for example, I posted results from the original Smith and Glass study on Facebook and Twitter—in particular, their finding that better controlled studies resulted in higher effect sizes. Immediately, a colleague responded, citing a new meta-analysis, “Usually, it’s the other way around…” and “More contemporary studies find that better methodology is associated with lower effect sizes.”

It’s a good idea to read this study closely. If you just read the “headline” (“The Effects of Psychotherapy for Adult Depression are Overestimated”), or skip the methods section and read the authors’ conclusions, you might be tempted to conclude that better designed studies produce smaller effects (in this particular study, in the case of depression). In fact, what the study actually says is that better designed studies will find smaller differences when a manualized therapy is compared to a credible alternative! Said another way, differences between a particular psychotherapy approach and an alternative (e.g., counseling, usual care, or placebo) are likely to be greater when the study is of poor quality.

What can we conclude? Just because a study is more recent does not mean it’s better, or more informative. The important question one must consider is, “What is being compared?” For the most part, Smith and Glass analyzed studies in which psychotherapy was compared to no treatment. The study cited by my colleague demonstrates what I, and others (e.g., Wampold, Imel, Lambert, Norcross, etc.) have long argued: few if any differences will be found between approaches.

The implications for research and practice are clear. For therapists, find an approach that fits you and benefits your clients. Make sure it works by routinely seeking feedback from those you serve. For researchers, stop wasting time and precious resources on clinical trials. Such studies, as Wampold and Imel so eloquently put it, “seemed not to have added much clinically or scientifically (other than to further reinforce the conclusion that there are no differences between treatments), [and come] at a cost…” (p. 268).

Until next time,

Scott D. Miller, Ph.D.

Director, International Center for Clinical Excellence

Thank you for this insightful analysis. It offers an argument to use with those in our health care organization who require us to limit our treatment to CBT because the insurance industry demands it.

Couldn’t agree more, Mr. Miller! Preach it. I know that’s what I teach my graduate students at Hope International University based on your and Wampold, Duncan, and Hubble’s findings. Very much appreciate your contributions to our field and to the practice of psychotherapy!

Love it! Everything you write is so illuminating. Thank you.

Thanks Scott. What a relief to start to focus on what will benefit each individual client instead of standing around debating the colour of the sand, the snow, the sky! We can get on with the work of being useful instead of being right.

Thanks for an interesting read. And thanks for including me in your mailing.

I wonder if, considering that training doesn’t matter as much as people claim and most approaches are effective, maybe therapists should not be paid as much? Perhaps volunteers with some basic counseling training could do just as good of a job?

(In my prior comment, My email address was incorrect. Here again are my remarks. – KM)

Dear Dr. Miller:

Thank you for this thoughtful review. I feel sure your comments about choice of ‘approach’ are meant to apply to therapist ‘theoretic orientation’ rather than disorder-specific or problem-specific, empirically supported interventions. The former concept has always been a concerning one, for me. We need to be conversant in many theories, yet avoid ‘identifying with’ any one of them. That can lead to over-using one ‘approach’ where another is more indicated by presenting functional problems, client concerns or distress, medical findings and sometimes, yes, even our own psychological diagnoses. That being said, of course you will be well aware that most such disorder-specific therapies can be cautiously and often helpfully adapted within a transdiagnostic framework. But perhaps your comments would be clearer if you could contextualize them in this regard. I feel sure you do not intend that we as psychologists, NIMH, or other nations’ research funding agencies ‘just drop the research thing already.’ If nothing else, we know that early identification/diagnosis and accurate, early intervention raises lifespan trajectories across a wide range of risks. Your comments suggest to me that your perspective may not yet be integral with current developmental science and its foundations in probabilistic empiricism. Again, thank you otherwise for the thoughtful review.

Does your conclusion, the importance of ‘personal, therapist fit’ and ‘client benefit’, take into consideration the possibility (maybe more than just a possibility considering empirical evidence) that there are certain, specific problems, e.g. OCD, for which there seems to be evidence that it does make a difference which approach one uses (as far as I know no evidence for psychodynamic approaches and quite some evidence for CBT)?

Well summarized as always, Scott! Thanks!

So it is the case that “everyone has won and all must have prizes” thereby concluding the Dodo Bird Verdict debate. But don’t you find this intellectually disturbing? If all these “bona fide” therapies are equally effective, how do we know that it is actually the therapies themselves that are effective rather than, say, just sounding smart and confident?