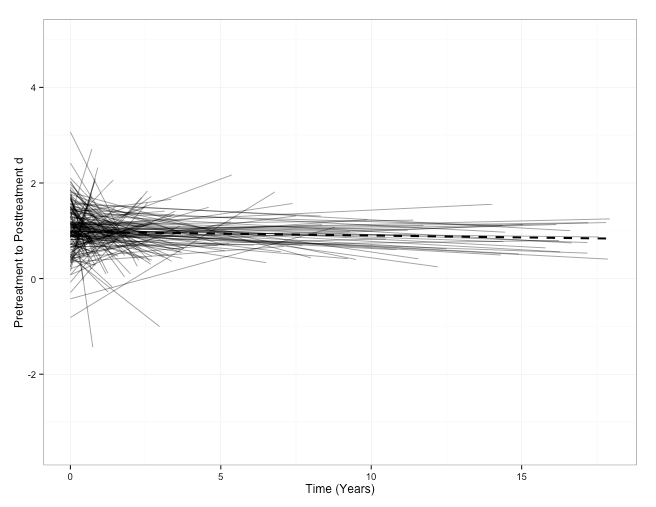

Take a look at the figure above.

It’s data taken from the largest study conducted in the history of psychotherapy research examining the relationship between experience and effectiveness.

It’s data taken from the largest study conducted in the history of psychotherapy research examining the relationship between experience and effectiveness.

Each of the thinner lines represents the outcomes of an individual practitioner, followed in some cases over a 17-year period. The single, thicker dashed line plots the average across all 170 practitioners, a group that measured the results of their work at every visit, with every client.

The data confirm what a host of correlational studies have hinted at since the mid-1980s: in general, clinician effectiveness declines with time and experience.

It’s not a steep slope, to be sure. The decline is slow and gradual, like a leak in a bicycle tire. Most problematically, as other research shows, it is also imperceptible. Indeed, as experience in the field grows, clinician confidence increases, leading most to see themselves as more effective than their results actually indicate.

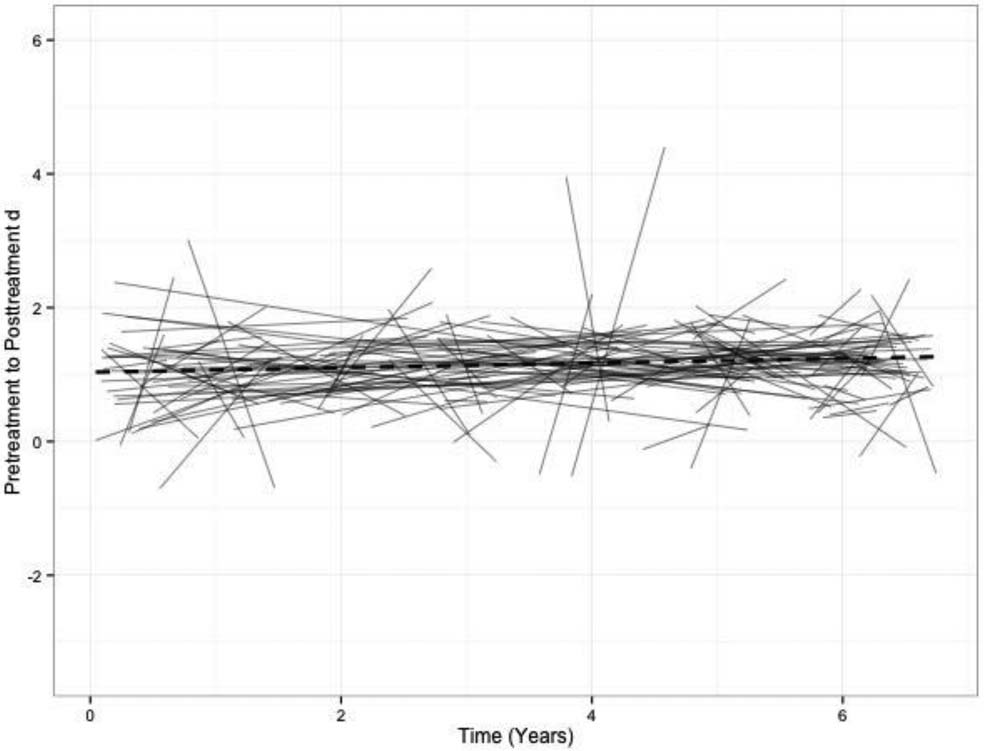

Now, consider the second figure. Once again, outcomes of individual practitioners are plotted.

Here, however, the slope is positive. In other words, therapists are becoming more and more effective over time. As before, the change is slow and gradual. Said another way, there are no shortcuts to improved outcomes: slow and steady wins the race. Moreover, unlike the therapists in the first study, these therapists are both aware of their growth and, curiously, less confident about the effectiveness of their work.

Importantly, the data in the second figure are not drawn from the application of some hypothetical, yet-to-be-hoped-for treatment model or training scenario. Indeed, they come from the only published study to date documenting the factors that actually influence the development of individual therapist effectiveness. When these factors are employed purposefully and mindfully, clinicians improve at three times the rate at which they were documented to decline in the prior study.

What are these factors?

Simple as it sounds: (1) measure your results; and (2) focus on your mistakes.

There is no way around this basic fact: any effort aimed at improving effectiveness begins with a valid and reliable estimate of one’s current outcomes. Without that, there is no way of knowing when progress is being made or not.

On the subject of mistakes, several studies now confirm that “healthy self-criticism,” or professional self-doubt (PSD), is a strong predictor of both alliance and outcome in psychotherapy (Nissen-Lie et al., 2015). Not surprisingly, therapist effectiveness improves in practice environments that provide: (1) ample opportunity and a safe place for discussing cases that are not making progress (or deteriorating); and (2) concrete suggestions for improvement that are tailored to the individual therapist.

Here are a couple of evidence-based resources you can tap in your efforts to improve your effectiveness in 2017:

- Begin measuring your results using two simple scales that have been tested in diverse settings and with a wide range of treatment populations. In 2013, they were approved by the Substance Abuse and Mental Health Services Administration (SAMHSA) and listed on the National Registry of Evidence-based Programs and Practices. Get them by registering for a free license here.

- If you work alone or need an error-friendly practice community in which to discuss your work, join the International Center for Clinical Excellence. It’s our free, online community. There, you can link up with other like-minded clinicians, share your work, discuss your outcomes, watch “how-to” videos, and help and be helped by practitioners around the world.